Last week, I talked about how we can estimate business fit more rigorously: define the factors that determine business fit, score each one separately on a common scale, and average the results. Breaking the estimate down this way makes the scores we assign more consistent, and more widely accepted. This week, I’m applying the same approach to estimating technical fit.

1. Service/Operational Level Agreement compliance

Our first component of technical fit is an obvious one. Service level agreements (SLAs) and operational level agreements (OLAs) have been used for decades to define expected levels of performance, and they naturally include targets for how the application operates. So – how well does the application meet these expected performance levels? A good approach is to set thresholds on the percentage of SLA and OLA targets met during the review period.
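To make the threshold idea concrete, here is a minimal Python sketch that converts the share of SLA/OLA targets met into a 0 to 5 score. The cut-off percentages in `SLA_CUTOFFS` and the function name are hypothetical placeholders; each organization would set its own bands.

```python
from bisect import bisect_right

# Hypothetical cut-offs: percentage of SLA/OLA targets met in the review period.
# Reaching each cut-off moves the score up one point (0 to 5).
SLA_CUTOFFS = [80.0, 90.0, 95.0, 98.0, 99.5]


def sla_compliance_score(targets_met: int, targets_total: int) -> int:
    """Convert the share of SLA/OLA targets met into a 0-5 score."""
    if targets_total <= 0:
        raise ValueError("targets_total must be positive")
    pct_met = 100.0 * targets_met / targets_total
    # bisect_right counts how many cut-offs pct_met has reached or exceeded.
    return bisect_right(SLA_CUTOFFS, pct_met)
```

For example, meeting 47 of 48 targets (about 97.9%) reaches the 80, 90 and 95 cut-offs but not 98, so it scores 3.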

2. Configurability

When we talked about business fit, we mentioned the importance of flexibility. Business needs change all the time, and an application that can’t adapt to them is of limited use. Flexibility can be achieved in various ways (configuration, custom modules, integration with other applications), so the question is: how easy is it? To what extent can changes be made through a GUI, rather than through command-line batch files or by coding in some proprietary language?
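One way to quantify “to what extent” is to look at how recent changes were actually made. The sketch below assumes, hypothetically, that we can count changes by mechanism, and that a GUI change is worth 5, a batch-file change 3 and a proprietary-code change 1. The weights and category names are illustrative, not a standard.

```python
# Hypothetical scores for each way a change can be made: the easier the
# mechanism, the higher its score on the 0-5 scale.
MECHANISM_SCORES = {
    "gui": 5,               # point-and-click configuration
    "batch": 3,             # command-line batch files / scripts
    "proprietary_code": 1,  # coding in a vendor-specific language
}


def configurability_score(changes_by_mechanism: dict[str, int]) -> float:
    """Weight each mechanism's score by the share of changes made through it."""
    total = sum(changes_by_mechanism.values())
    if total == 0:
        raise ValueError("no changes recorded")
    weighted = sum(MECHANISM_SCORES[m] * n for m, n in changes_by_mechanism.items())
    return round(weighted / total, 2)
```

An application where 6 of 10 changes went through the GUI, 3 through batch files and 1 through code scores (6×5 + 3×3 + 1×1) / 10 = 4.0.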

3. Bug fixes

How often does the application need fixes? Does it tick over quietly, or are we applying patch after patch after patch? Again, we can set thresholds to quantify this area more precisely, for example on the number of patches applied per month. To allow applications to be ranked against each other, the thresholds should be based on the numbers of bug fixes actually observed across the applications being scored.
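Setting the thresholds from observed data can be sketched with Python’s `statistics.quantiles`: the five cut-offs that split the portfolio into six bands become the score boundaries, and fewer patches earn a higher score. The function name and the choice of six equal-sized bands are assumptions for illustration; note that `quantiles` needs data from at least two applications.

```python
from statistics import quantiles


def bug_fix_scores(monthly_patches: dict[str, float]) -> dict[str, int]:
    """Score applications 0-5 using cut-offs taken from the portfolio's own data."""
    # Five cut-offs split the observed patch counts into six bands.
    cutoffs = quantiles(list(monthly_patches.values()), n=6, method="inclusive")
    scores = {}
    for app, patches in monthly_patches.items():
        # Count the cut-offs this app exceeds; fewer patches means a higher score.
        exceeded = sum(patches > c for c in cutoffs)
        scores[app] = 5 - exceeded
    return scores
```

With monthly patch counts of 0, 1, 2, 4, 8 and 16 across six applications, each lands in its own band and the scores run from 5 down to 0.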

4. Major incidents

The inherent stability of the application is an important factor in how well it fits the organization’s technical environment. A good proxy for the vague concept of ‘stability’ is the number of major incidents. I’m going to argue that we should count only major incidents, because they are far more visible across the organization than minor ones. So – how often does the application experience a major incident?
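Counting only major incidents might look like the sketch below. The `Incident` record, the convention that priority 1 marks a major incident, and the rule of losing one point per major incident are all hypothetical; in practice the definition of ‘major’ comes from your own incident management process.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Incident:
    app: str
    priority: int  # hypothetical convention: 1 = major incident


def major_incident_scores(incidents: list[Incident], apps: list[str]) -> dict[str, int]:
    """Score each app 0-5 from its count of major incidents in the review period."""
    # Only major incidents are counted; lower-priority tickets are ignored.
    majors = Counter(i.app for i in incidents if i.priority == 1)
    # Hypothetical rule: start at 5 and lose one point per major incident.
    return {app: max(0, 5 - majors[app]) for app in apps}
```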

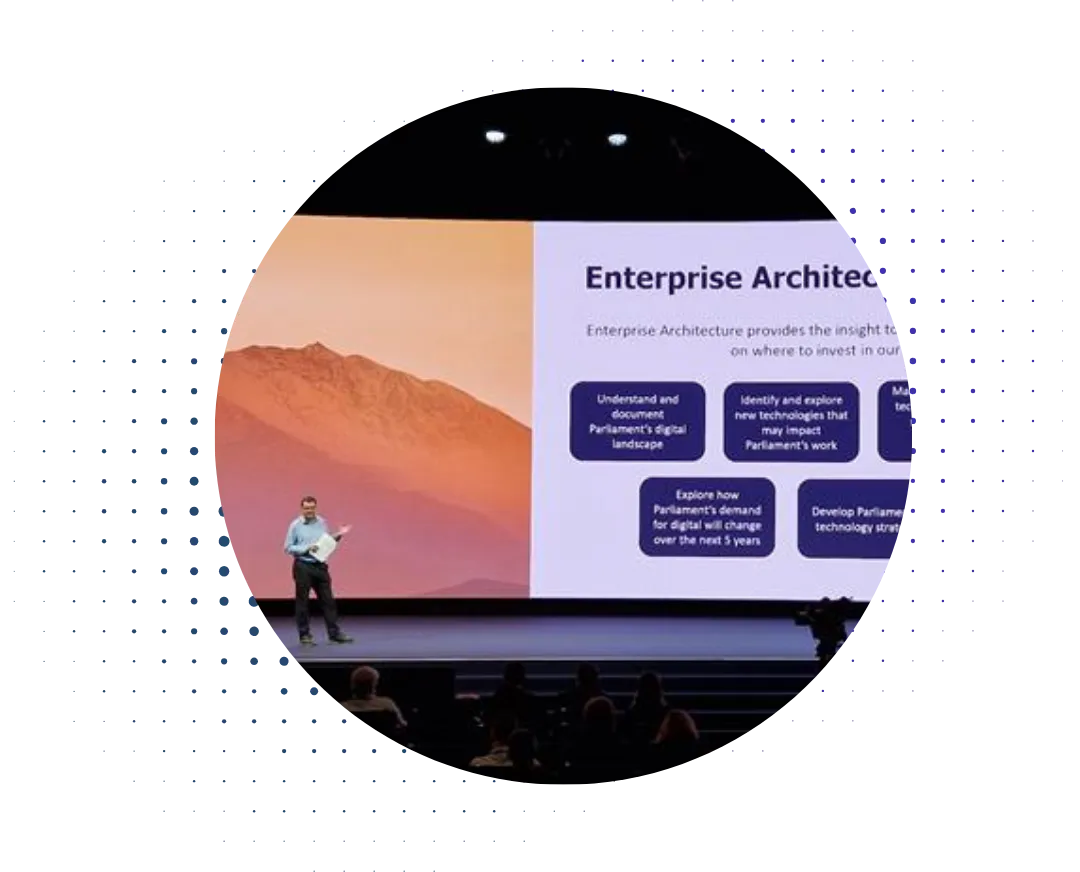

5. Architectural compliance

Last of all, our rating of the technical fit of the application should include how well it complies with the architectural policies we have in place. After all, architectural policies exist to define a desired technical environment, so it makes a lot of sense to consider them when evaluating the technical fit of an application. So our final question is: is the application fully compliant, partially compliant, or non-compliant with our architectural policies? If it is not fully compliant, what timeframe exists for achieving full compliance? Is that likely to be a short-term goal, a long-term goal, or simply infeasible?
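The compliance status and remediation timeframe can be turned into a score with a simple lookup table. The status labels and point values below are hypothetical, chosen only so that fully compliant applications score highest and infeasible non-compliance scores lowest.

```python
from typing import Optional

# Hypothetical mapping from (compliance status, timeframe to full compliance)
# to a 0-5 score. A fully compliant application needs no timeframe.
COMPLIANCE_SCORES = {
    ("full", None): 5,
    ("partial", "short_term"): 4,
    ("partial", "long_term"): 3,
    ("partial", "infeasible"): 2,
    ("none", "short_term"): 2,
    ("none", "long_term"): 1,
    ("none", "infeasible"): 0,
}


def architecture_score(status: str, timeframe: Optional[str] = None) -> int:
    """Look up the score for a compliance status and remediation timeframe."""
    key = (status, None if status == "full" else timeframe)
    if key not in COMPLIANCE_SCORES:
        raise ValueError(f"unknown status/timeframe: {key}")
    return COMPLIANCE_SCORES[key]
```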

As with business fit scoring, each of these factors should be scored on the same range, e.g., 0 to 5, so that the scores across all factors can be averaged.
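The averaging step itself is short. This sketch checks that every factor score sits on the shared 0 to 5 scale before taking the mean; the function name and the factor names in the example are placeholders for the five factors above.

```python
from statistics import mean


def technical_fit(scores: dict[str, float], low: float = 0, high: float = 5) -> float:
    """Average per-factor scores that all sit on the same scale."""
    for factor, score in scores.items():
        if not low <= score <= high:
            raise ValueError(f"{factor} score {score} outside {low}-{high}")
    return round(mean(list(scores.values())), 2)
```

For example, `technical_fit({"sla": 3, "configurability": 4.0, "bug_fixes": 2, "major_incidents": 3, "architecture": 4})` returns 3.2.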

Next week we’ll cover the last category, Risk. Until then, here are some more examples of how to create great application scorecards.
